Lack of good evaluation of participatory projects is probably the greatest contributing factor to their slow acceptance and use in the museum field. Evaluation can help you measure the impact of past projects and advocate for future initiatives. It helps you articulate and share what worked and what didn’t. Particularly in an emerging field of practice, evaluation can help professionals learn from and support each other’s progress.

While participatory projects do not require fundamentally different evaluation techniques from other types of projects, there are four considerations that make participatory projects unique when it comes to their assessment:

- Participatory projects are about both process and product. Participatory projects require people to do something for them to work, which means evaluation must focus on participant behavior and the impact of participatory actions. It is not useful to merely catalog the participatory platforms institutions offer, such as the number of comment boards, participatory exhibit elements, or dialogue programs provided. Evaluators must measure what participants do and describe what happens as a result of participation. Participatory outcomes may be external, like increased incidence of conversation among visitors, or internal, such as development of new skills or enhanced relationships.

- Participatory projects are not just for participants. It is important to define goals and assess outcomes not only for participants, but for staff members and non-participating audiences as well. For each project, you should be able to articulate goals for the participants who actively collaborate with the institution, for the staff members who manage the process, and for the audience that consumes the participatory product.

- Participatory projects often benefit from incremental and adaptive measurement techniques. Many participatory projects are process-based. If you are going to work with community members for three years to design a new program, it’s not useful to wait until the end of the three years to evaluate the overall project. Incremental assessment can help complex projects stay aligned to their ultimate goals while making the project work for everyone involved.

- Sometimes, it is beneficial to make the evaluative process participatory in itself. When projects are co-designed by institutions with community members, it makes sense to involve those participants in the development and implementation of project evaluations. This is particularly true for co-creative and hosted projects in which participants have a high level of responsibility for the direction of the project.

Evaluating Impact

Evaluating the impact of participatory projects requires three steps:[1]

- Stating your goals

- Defining behaviors and outcomes that reflect those goals

- Measuring or assessing the incidence and impact of the outcomes via observable indicators

Goals drive outcomes that are measured via indicators. These three steps are not unique to evaluating participatory projects, but participatory projects frequently involve goals and outcomes that are different from those used to evaluate traditional museum projects.

Recall the wide-ranging indicators the Wing Luke Asian Museum uses to evaluate the extent to which it achieves its community mission (see page 286). When it comes to participants, the staff assesses the extent to which “people contribute artifacts and stories to our exhibits.”[2] With regard to audience members, staff track whether “constituents are comfortable providing both positive and negative feedback” and “community members return time and time again.” Institutionally, they evaluate employees’ “relationship skills” and the extent to which “young people rise to leadership.” And with regard to broader institutional impact, they even look at the extent to which “community responsive exhibits become more widespread in museums.” These outcomes and indicators may be atypical, but they are all measurable. For example, the metric around both positive and negative visitor comments is one that reflects their specific interest in supporting dialogue, not just receiving compliments. Many museums review comments from visitors, but few judge their success by the presence of negative as well as positive ones.

Step 1: Articulating Participatory Goals

The first step to evaluating participatory projects is to agree on a clear list of goals. Particularly when it comes to new and unfamiliar projects, staff members may have different ideas about what success looks like. One person may focus on sustained engagement with the institution over time, whereas another might prioritize visitor creativity. Clear participatory goals can help everyone share the same vision for the project or the institution.

This simple diagram helps MLS staff members evaluate potential programs against institutional goals.

Goals for engagement do not have to be specific to individual projects; they can also be generalized to participatory efforts throughout the institution. For example, at the Museum of Life and Science (MLS), Beck Tench created a honeycomb diagram to display the seven core goals MLS was trying to achieve across its forays into social participation: to educate, give a sense of place, establish transparency, promote science as a way of knowing, foster dialogue, build relationships, and encourage sharing. This diagram gave staff members at MLS a shared language for contextualizing the goals they might apply to participatory projects.

The diagram became a planning tool. For proposed social media experiments, Tench and other MLS staff members shaded the cells of the honeycomb to identify which goals they felt that project would target. This helped them prioritize their ideas and be aware of potential imbalances in their offerings. Later, staff teams used the diagrams to reflect on the extent to which the goals they expected to achieve were met in implementation.
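The shading exercise can be pictured as a simple tally. The sketch below is illustrative only: the seven goals come from the text, but the project names and the cells shaded for each are hypothetical, and nothing here represents the tool MLS staff actually used. It counts how many proposed projects target each goal and lists the goals that no project addresses, which is one way to spot an imbalance.

```python
from collections import Counter

# The seven MLS goals named in the text.
GOALS = [
    "educate",
    "give a sense of place",
    "establish transparency",
    "promote science as a way of knowing",
    "foster dialogue",
    "build relationships",
    "encourage sharing",
]

# Hypothetical projects and the honeycomb cells staff shaded for each.
shaded = {
    "photo-sharing experiment": {"encourage sharing", "build relationships"},
    "behind-the-scenes blog": {"establish transparency", "educate"},
    "animal keeper chat": {"educate", "foster dialogue", "build relationships"},
}


def goal_coverage(projects):
    """Count how many projects target each goal, including goals with zero."""
    counts = Counter(goal for cells in projects.values() for goal in cells)
    return {goal: counts.get(goal, 0) for goal in GOALS}


coverage = goal_coverage(shaded)

# Goals that none of the proposed projects would target.
untargeted = [goal for goal, n in coverage.items() if n == 0]
```

With these made-up projects, two goals end up untargeted, which is the kind of gap a planning team might want to discuss before committing resources.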

The honeycomb diagram is a simple framework that MLS staff members can apply against a range of projects at different points in their planning and implementation. It is accessible to team members of all levels of evaluation experience and expertise, including visitors and community participants. This makes it easy for everyone to use and understand.

Step 2: Defining Participatory Outcomes

Shared goals provide a common vocabulary to help staff members talk about their aspirations. Outcomes are the behaviors that the staff perceives as indicative of goals being met. Outcomes and outputs are two different things. For example, consider the participatory goal for an institution to become a “safe space for difficult conversations.” The starting point for many museums with this goal would be to host exhibits or programs on provocative topics likely to stir up “difficult conversations.” But offering an exhibition about AIDS or a panel discussion about racism ensures neither dialogue nor the perception of a safe space. An exhibition is an output, but it does not guarantee the desired outcomes.

What are the outcomes associated with a “safe space for difficult conversations”? Such a space would:

- Attract and welcome people with differing points of view on contentious issues

- Provide explicit opportunities for dialogue among participants on tough issues

- Facilitate dialogue in a way that makes participants feel confident and comfortable expressing themselves

- Make people feel both challenged and supported by the experience

Each of these is an outcome that can be measured. For example, imagine a comment board in an exhibition intended to be a “safe space for difficult conversations.” Staff members could code:

- The range of divergent perspectives demonstrated in the comments

- The tone of the comments (personal vs. abstract, respectful vs. destructive)

- The extent to which visitors responded to each other’s comments

Researchers could also conduct follow-up interviews to ask visitors whether they contributed to the comment board, how they felt about the comments on display, their level of comfort in sharing their own beliefs, the extent to which their perspective was altered by the experience of expressing their own ideas and/or reading others’, and whether they would seek out such an experience in the future.
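As a rough illustration of the comment-board coding described above, the sketch below summarizes a set of coded comments along the three dimensions listed: range of perspectives, tone, and visitor-to-visitor response. The field names, stance labels, and sample comments are all hypothetical; a real coding scheme would be defined by the evaluation team.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CodedComment:
    comment_id: int
    stance: str                     # coder's label for the perspective expressed
    personal: bool                  # True = personal, False = abstract
    respectful: bool                # True = respectful, False = destructive
    reply_to: Optional[int] = None  # id of the comment this one answers, if any


def summarize(comments):
    """Summarize coded comments: perspective range, tone, and response rate."""
    total = len(comments)
    if total == 0:
        return {"distinct_stances": 0, "share_personal": 0.0,
                "share_respectful": 0.0, "share_replies": 0.0}
    return {
        "distinct_stances": len({c.stance for c in comments}),
        "share_personal": sum(c.personal for c in comments) / total,
        "share_respectful": sum(c.respectful for c in comments) / total,
        "share_replies": sum(c.reply_to is not None for c in comments) / total,
    }


# Hypothetical coded comments from one day on the board.
sample = [
    CodedComment(1, "supports", personal=True, respectful=True),
    CodedComment(2, "opposes", personal=False, respectful=True, reply_to=1),
    CodedComment(3, "opposes", personal=True, respectful=False),
    CodedComment(4, "undecided", personal=True, respectful=True, reply_to=2),
]
summary = summarize(sample)
```

Tracking these shares over time, rather than judging any single comment, keeps the focus on whether the board as a whole is behaving like the space the staff hoped to create.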

Step 3: Developing Meaningful Measurement Tools

Once staff members know what goals they are trying to achieve and what outcomes reflect those goals, they can develop evaluative tools to assess the incidence of the outcomes. This is often the most challenging part of evaluation design, and it requires thinking creatively about what behaviors or indicators are associated with desired outcomes.

The New Economics Foundation has defined four qualities of effective evaluative indicators: action-oriented, important, measurable, and simple.[3] Imagine developing indicators to reflect this goal for an educational program: “visitors will have deeper relationships with the staff.” What measurable indicators would provide valuable information about relationships between staff members and visitors? How could assessment help staff members develop new strategies or practices to improve incidence of the outcome in future projects?

To assess this outcome, you might consider the following indicators:

- Whether staff members and participants could identify each other by name at different stages during and after the program

- The volume and type of correspondence (email, phone, social networks) among participants and staff outside of program time

- Whether staff members and participants stayed in touch at fixed time intervals after the program was over

These indicators might help determine what kinds of behaviors are most effective at promoting “deeper relationships” and encourage the staff to adjust their actions accordingly. For example, some educators (like the volleyball teacher described on page 39) find it particularly valuable to learn students’ names and begin using them from the first session. These kinds of discoveries can help staff members focus on what’s important to achieve their goals.

CASE STUDY: Engaging Front-Line Staff as Researchers at the Museum of Science and Industry Tampa

When indicators are simple to measure and act on, staff members and participants at all levels of research expertise can get involved in evaluation. For example, at the Museum of Science and Industry in Tampa (MOSI), front-line staff members were engaged as researchers in the multi-year REFLECTS project. The goal of REFLECTS was to train educators to be able to scaffold family visitor experiences in ways that would encourage “active engagement” (as opposed to passive disinterest). To make it possible for front-line educators to effectively recognize when visitors were and were not actively engaged in their experience, the research team developed a list of eleven visitor behaviors that they felt indicated active engagement, including: visitors making comments about the exhibit, asking and answering each other’s questions, making connections to prior experiences, and encouraging each other’s behaviors.

Educators made video and audio recordings of their interactions with families and then went back later to code the recordings for those eleven indicators. The REFLECTS team didn’t judge the content of the cues (i.e. whether a visitor asked a personal question or a science-focused one), just tallied their incidence. And then educators headed back out on the floor to adjust their behavior and try again.
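The tallying step is simple enough to sketch. In the example below, only four indicators appear because the text names only four of the eleven; the identifier names and the coded events are hypothetical and do not reproduce any REFLECTS data. The point is that coders count incidence without judging content, then compare sessions.

```python
from collections import Counter

# Four of the eleven behaviors; the text does not list the other seven.
INDICATORS = {
    "comment_on_exhibit",
    "ask_or_answer_question",
    "connect_to_prior_experience",
    "encourage_behavior",
}


def tally(coded_events):
    """Count how often each indicator was coded in one recording."""
    unknown = set(coded_events) - INDICATORS
    if unknown:
        raise ValueError(f"unrecognized codes: {sorted(unknown)}")
    return Counter(coded_events)


# Hypothetical codes from an educator's earlier and later recordings.
before = tally(["comment_on_exhibit", "ask_or_answer_question"])
after = tally([
    "comment_on_exhibit",
    "comment_on_exhibit",
    "ask_or_answer_question",
    "connect_to_prior_experience",
    "encourage_behavior",
])

# Difference in incidence per indicator between the two recordings.
change = {name: after[name] - before[name] for name in sorted(INDICATORS)}
```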

At the 2009 Association of Science and Technology Centers conference, MOSI staff members showed video of themselves engaging with visitors before and after working in the REFLECTS program, and the difference was impressive. The educators didn’t communicate more or better content in the “after” videos. Instead, they did a better job supporting visitors’ personal connection to the exhibits, rather than trying, often unsuccessfully, to coerce visitors into engagement.

The REFLECTS research served three audiences: visitors, front-line staff, and the institution. For visitors, it improved the quality of staff interactions. For the front-line staff, it provided empowerment and professional development opportunities. For the institution, it made front-line interactions with visitors more effective. The primary researcher, Judith Lombana, had observed that museums spend a lot of time engaging with visitors in ways that do not improve engagement or learning. This is a business problem. As she put it: “waste occurs with activities or resources that some particular guest does not want.” By finding action-oriented, important, simple measures for active engagement, the REFLECTS team was able to create an evaluation strategy that succeeded on multiple levels.

Evaluation Questions Specific to Participation

Because participatory practices are still fairly new to cultural institutions, there are few comprehensive examples of evaluation techniques and instruments specific to participatory projects. Traditional evaluation techniques, like observation, tracking, surveys, interviews, and longitudinal studies, are all useful tools for assessing participatory projects. However, because participatory projects often involve behaviors that are not part of the traditional visitor experience, it’s important to make sure that evaluation instruments will capture and measure the distinct experiences offered.

If your institution’s standard summative evaluation of an exhibition or program is about the extent to which visitors have learned specific content elements, switching to an evaluative tool that allows the staff to assess the extent to which visitors have exercised creative, dialogic, or collaborative functions can be quite a leap.

To design successful evaluative tools for participatory projects:

- Review the specific skills and values that participatory experiences support (see page 211) to determine which kinds of indicators might reflect your project’s goals

- Take a 360-degree approach, looking at goals, outcomes, and indicators for staff members, participants, and non-participating visitors

- Consult with participants and project staff members to find out what outcomes and indicators they think are most important to measure

Here are some specialized questions to consider that pertain specifically to the participant, staff, and visitor experience of participatory projects.

Questions about participants:

- If participation is voluntary, what is the profile of visitors who choose to participate actively? What is the profile of visitors who choose not to participate?

- If there are many forms of voluntary participation, can you identify the differences among visitors who choose to create, to critique, to collect, and to spectate?

- How does the number or type of model content affect visitors’ inclination to participate?

- Do participants describe their relationship to the institution and/or to staff in ways that are distinct from the ways other visitors describe their relationship?

- Do participants demonstrate new levels of ownership, trust, and/or understanding of institutions and their processes during or after participation?

- Do participants demonstrate new skills, attitudes, behaviors, and/or values during or after participation?

- Do participants seek out more opportunities to engage with the institution or to engage in participatory projects?

Questions about staff:

- How do participatory processes affect staff members’ self-confidence and sense of value to the institution?

- Do staff members demonstrate new skills, attitudes, behaviors, and/or values during or after participation?

- Do staff members describe their relationships to colleagues and/or visitors as altered by participation?

- Do staff members describe their roles differently during or after participation?

- How do staff members perceive the products of participation?

- Do staff members seek out more opportunities to engage in participatory projects?

Questions about non-participating visitors who watch or consume the products of participation (exhibits, programs, publications):

- Do visitors describe products created via participatory processes differently from those created via traditional processes? Do they express comparative opinions about these products?

- If participation is open and voluntary, do visitors understand the opportunity to participate?

- Why do visitors choose not to participate? What would make them interested in doing so?

Because participation is diverse, no single set of questions or evaluative technique is automatically best suited to its study. There are researchers in motivational psychology, community development, civic engagement, and human-computer interaction whose work can inform participatory projects in museums.[4] By partnering with researchers from other fields, museum evaluators can join participatory, collaborative learning communities to the mutual benefit of all parties.

CASE STUDY: Studying the Conversations on Science Buzz

Imagine a project that invites visitors to engage in dialogue around institutional content. How would you study and measure their discussions to determine whether users were just chatting or really engaging around the content of interest?

In 2007, the Institute of Museum and Library Services (IMLS) funded a research project called Take Two to address this question. Take Two brought together researchers in the fields of rhetoric, museum studies, and science education to describe the impact of a participatory project called Science Buzz that invited visitors to engage in dialogue on the Web about science.

Science Buzz is an award-winning online social network managed by the Science Museum of Minnesota.[5] It is a multi-author community website and exhibit that invites museum staff members and outside participants to write, share, and comment on articles related to contemporary science news and issues. Science Buzz also includes physical museum kiosks located in several science centers throughout the US, but the Take Two study focused on the online discourse.

Science Buzz is a complicated beast. From 2006 to 2008, staff members and visitors posted and commented on over 1,500 topics, and the blog enjoyed high traffic from an international audience. While the museum had conducted internal formative evaluation on the design and use of Science Buzz,[6] the staff was interested in conducting research on how users interacted with each other on the website and what impact it had on their learning.

That’s where Take Two came in. Because Science Buzz is a dialogue project, it made sense to work with researchers from the field of rhetoric. Jeff Grabill, a Michigan State University professor who focuses on how people use writing in digital environments, led the research. The Take Two team focused their study on four questions:

- What is the nature of the community that interacts through Science Buzz?

- What is the nature of the on-line interaction?

- Do these on-line interactions support knowledge building for this user community?

- Do on-line interactions support inquiry, learning, and change within the museum – i.e., what is the impact on museum practice?[7]

The first two questions are descriptive and focus on better understanding the user profile and the dialogic ways that people engage with each other on the website. The last two are about impact outcomes both for participants and for the staff. Since the researchers were examining historic blog posts, they did not have access to non-participating audience members. They did not study the impact on those who consume the content on Science Buzz but do not contribute content to the site themselves.

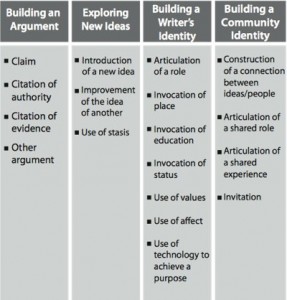

To evaluate the knowledge building impact of Science Buzz, the researchers coded individual statements in blog posts and comments for twenty percent of posts with fifteen comments or more, grouping them into four categories: “building an argument,” “exploring new ideas,” “building a writer’s identity,” and “building a community identity.” Staff members associated each statement with one of these four categories using a comprehensive set of descriptive indicators (see table).

The Take Two research team coded comments on Science Buzz based on the incidence of these indicators.

By coding individual statements, researchers were able to spot patterns in argumentation used on the site that represented different forms of individual and/or interpersonal knowledge building. For the representative sample used, the researchers found the following overall distribution of statement types:

- Building an argument – 60%

- Building a writer’s identity – 25%

- Building community identity – 11.4%

- Exploring new ideas – 1.8%

This data demonstrated that Science Buzz users were definitely using the blog to make arguments about science, but not necessarily to construct knowledge communally. For this reason, the Take Two team shifted its research in the third year of the study, away from “co-construction of knowledge” and toward a broader examination of “learning.”
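The two mechanical steps in this kind of analysis, choosing which posts to code and turning coded statements into a distribution, might look roughly like the sketch below. It rests on several assumptions: the text does not say how the twenty percent was drawn, so random selection is a stand-in, and the post ids, comment counts, and coded statements are invented rather than taken from the Take Two data.

```python
import random
from collections import Counter


def select_sample(comment_counts, min_comments=15, fraction=0.2, seed=0):
    """Pick a random fraction of the posts with at least min_comments comments."""
    eligible = sorted(pid for pid, n in comment_counts.items() if n >= min_comments)
    k = round(len(eligible) * fraction)
    return random.Random(seed).sample(eligible, k)


def distribution(codes):
    """Return each category's share of all coded statements, as a percentage."""
    counts = Counter(codes)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {category: 100 * n / total for category, n in counts.items()}


# Hypothetical mapping of post id to number of comments.
comment_counts = {1: 3, 2: 15, 3: 40, 4: 22, 5: 8, 6: 16, 7: 30,
                  8: 19, 9: 2, 10: 51, 11: 17, 12: 25, 13: 33}
sampled_posts = select_sample(comment_counts)

# Hypothetical category labels assigned to ten statements in the sampled posts.
codes = (["building an argument"] * 6
         + ["building a writer's identity"] * 3
         + ["building a community identity"] * 1)
shares = distribution(codes)
```

Fixing the random seed makes the sample reproducible, which matters if several coders need to work from the same set of posts.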

They used the National Academies of Science’s 2009 Learning Science in Informal Environments (LSIE) report as the basis for the development of new indicators with which to code Science Buzz conversations.[8] The LSIE report presented six “strands” of science learning, including elements like identity-building, argumentation, and reflection that were clearly visible in Science Buzz discourse. As of January 2010, this research is still ongoing. Dr. Kirsten Ellenbogen, Director of Evaluation and Research in Learning at the Science Museum of Minnesota, commented:

The LSIE report stated that science argumentation is rare in museum exhibits, and it suggested that informal environments were a long way from providing the necessary instruction to support scientific argumentation.[9] But Science Buzz is a natural dialogue setting, and we felt like we were seeing scientific argumentation and debate happening all over the site. The Take Two research gave us evidence to support that.

By partnering with researchers in the field of rhetoric, the Science Buzz team was better able to understand and describe the nature and potential impact of conversation on the website. The research also revealed new questions for study both on Science Buzz and other online dialogue sites. One of the findings of Take Two was that identity-building statements are often intertwined with scientific arguments, and it is important to understand who a person is as well as what they say. This may sound obvious, but as Grabill noted, rhetoricians frequently separate what they consider rational statements from affective or “identity work” statements, which are considered less important to argumentation. While the first phase of the research focused entirely on types of statements made in discrete, anonymized comments, the second phase included examination of the particular role of staff participants in promoting learning. In future research of Science Buzz, it’s possible to go even further, examining how individual users’ interactions with the site over time impact their learning, self-concept, and contribution to the community.

Part of the challenge of the Take Two project was simply developing the analytic tools to study a familiar question (science knowledge-building) in a new environment (online social network). The team focused on mission-driven questions, found reasonable tools to answer those questions, rigorously applied those tools, and published the results. Hopefully many future teams will approach research on visitor participation with a comparable level of rigor, creativity, and interest in sharing lessons learned with the field.

Incremental and Adaptive Participatory Techniques

While formal evaluation is typically separated into discrete stages—front-end, formative, remedial, summative—participatory projects often benefit from more iterative approaches to assessment. One of the positive aspects of participatory projects is that they don’t have to be fully designed before launch. Participatory projects are often released bit by bit, evolving in response to what participants do. This can be messy and can involve changing design techniques or experimental strategies along the way, which, as noted in the stories of The Tech Virtual and Wikipedia Loves Art, can either alleviate or increase participant frustration. While changes may be frustrating and confusing, they are often essential to keep an experimental project going in the right direction.

Adaptive evaluation techniques are particularly natural and common to the Web for two reasons. First, collecting data about user behavior is fairly easy. There are many free analytical tools that allow Web managers to capture real-time statistics about who visits which pages and how they use them. These tools automate data collection, so staff members can focus on working with the results rather than generating them.

Second, most Web designers, particularly those working on social websites, expect their work to evolve over time. Most Web 2.0 sites are in “perpetual beta,” which means that they are released before completion and remain a work-in-progress—sometimes for years. This allows designers to be responsive to observed user behaviors, altering the platform to encourage certain actions and minimize or eliminate others.

Adaptive evaluation can help designers and managers see where they are and aren’t hitting their goals and adjust their efforts accordingly. For example, the Powerhouse Museum children’s website features a popular section called “Make & Do” which offers resources for family craft activities.[10] Each craft activity takes about two weeks of staff time to prepare, so the team decided to use Web analytics to determine which activities were most popular and use those as a guide for what to offer next.

In the site’s first two years, Web analytics showed that the most popular craft activity by far was Make a King’s Crown, which provides templates and instructions to cut out your own royal headgear. At first, the staff responded by producing similar online craft resources, providing templates for wizards’ hats, jester hats, samurai helmets, and masks. Then, the Web team dug a little deeper into the Web metrics and realized that the vast majority of the visitors to the King’s Crown page were based outside Australia. When they looked at the geographic detail for the Make & Do section, they found that gardening and Easter-related activities were far more popular with Australian and Sydney residents than various forms of hat making. Because the Powerhouse is a majority state-funded museum (and because the children’s website was intended to primarily provide pre- and post-visit experiences), their priority is local audiences. They decided to redirect future craft resources away from headgear and towards activities that were of more value and interest to Australians from New South Wales.[11]
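The Powerhouse team's second look at the data amounts to ranking the same page views twice: once overall and once for the local audience only. The sketch below shows that comparison with invented numbers; the activity names echo the text, but the view counts, country codes, and record layout are assumptions, not the museum's analytics.

```python
from collections import defaultdict

# Hypothetical page-view records: (activity, visitor country, views).
page_views = [
    ("Make a King's Crown", "US", 900),
    ("Make a King's Crown", "AU", 60),
    ("Gardening", "AU", 300),
    ("Gardening", "US", 40),
    ("Easter activities", "AU", 250),
    ("Easter activities", "UK", 30),
]


def rank_activities(records, country=None):
    """Rank activities by total views, optionally counting one country only."""
    totals = defaultdict(int)
    for activity, visitor_country, views in records:
        if country is None or visitor_country == country:
            totals[activity] += views
    return sorted(totals, key=totals.get, reverse=True)


overall = rank_activities(page_views)              # all visitors
local = rank_activities(page_views, country="AU")  # priority audience only
```

In this made-up data the crown activity leads overall but comes last among Australian visitors, mirroring the pattern the team found when they filtered by geography.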

Adaptive evaluation can be applied to physical venues and visitor experiences, but it’s not easy. Cultural institutions tend to lack both the Web’s automated analytic tools and its flexible growth pattern. If the educational program and exhibition schedule is set months or even years in advance, it is unlikely that staff members will be able to shift gears based on visitor input. This is one of the reasons that remedial evaluation can be so painful in museums; even if staff members would like to make changes to improve the visitor experience based on observed problems, it’s hard to find the money or time to do so. Many staff members can also get focused on “seeing it through” and may be concerned about tainting data by making changes in mid-stream. Encouraging adaptive evaluation requires cultivating a culture of experimentation.

When it comes to physical platforms for participation, it’s not necessary to design the perfect system behind the scenes. Release it unfinished, see what visitors do, and adjust accordingly. If projects are truly designed to “get better the more people use them,” then there’s a built-in expectation that they will grow and change over their public life. Staff members can be part of that growth and change through adaptive evaluation.

Continual evaluation can also provide a useful feedback loop that generates new design insights that can ultimately lead to better visitor experiences. Consider the humble comment board. If there is one in your institution, consider changing the material provided for visitors to write on or with and see how it affects the content and volume of comments produced. Change the prompt questions, add a mechanism by which visitors can easily write “response cards” to each other, or experiment with different strategies for staff curation/comment management. By making small changes to the project during operation, you can quickly see what kinds of approaches impact the experience and how.

Involving Participants in Evaluation

One of the underlying values of participatory projects is respect for participants’ opinions and input. Because project ownership is often shared among staff members and participants, it makes sense to integrate participants into evaluation as well as design and implementation. This doesn’t mean evaluating participant experiences, which is part of most institutional evaluative strategies. It means working with participants to plan, execute, and distribute the evaluation of the project.

Adaptive projects often include casual ways for participants to offer their feedback on projects at any time, either by communicating with project staff members or sharing their thoughts in community meetings or forums. Particularly when participants feel invested in a project, either as contributors or as full co-creative partners, they want to do what they can to help improve and sustain the project’s growth. Participants may notice indicators that are not readily apparent to project staff, and they can offer valuable input on the most effective ways to measure and collect data related to their experiences.

For example, the St. Louis Science Center’s Learning Places project, in which teenagers designed science exhibits for local community centers, was evaluated in two ways: by an external evaluator and by internal video interviews with the teenage participants.[12] The external evaluation focused on the teens’ understanding and retention of science, technology, engineering, and mathematics concepts, whereas the participants’ interviews focused on the project’s impact on teens’ educational and career choices. These different evaluative techniques reflected two different sets of measurement goals and priorities. While the funder (the National Science Foundation) was interested in how Learning Places promoted science learning, the teen participants and the project staff were interested in how the program impacted personal and professional development.

Involving community members in the design and implementation of evaluative techniques is not easy. Participatory evaluation requires additional resources. For many institutions, it may be too expensive to sustain participatory partnerships through the research stage, or participants may not be able to continue their involvement beyond the co-design project.

Participatory evaluation is also challenging because it requires a radical shift in thinking about the audience for the research and how it will be used. At least in the museum field, researchers and practitioners are still working out how visitor studies can most usefully influence how institutions function. It’s an even further step to suggest that not only should visitors’ reactions and experiences partly guide professional practice, but that their goals should drive research just as much as institutional goals. This can be particularly challenging when working with an outside funder with specific research expectations that may not be relevant to the goals and interests of participants.

How can you decide whether to involve participants in evaluation? Just as there are multiple models for ways to engage community members in participatory projects, there are several ways to involve them in evaluation. The LITMUS project in South London separated evaluation of community projects into three basic models: top-down, cooperative, and bottom-up.[13] Top-down evaluation is a traditional assessment strategy in which senior managers or external evaluators plan and manage evaluation. External evaluators also lead cooperative evaluation, but in this model, evaluators serve as guides, working with participants and project staff to develop assessment techniques and to collect and analyze data. In bottom-up models, external evaluators still facilitate the evaluation process, but their work is directed by participants and project staff to address their interests rather than institutionally driven measures of success.

Choosing the most effective way to engage participants in evaluation depends on several factors, including:

- Participant motivation. Are participants interested and willing to participate in evaluation?

- Participant availability. Are participants able to continue contributing to the evaluation of projects after those projects are completed? Can the institution compensate them for their time in some way?

- Participant ability. Are participants sufficiently skilled and trusted in the community to help lead a fair evaluation of the project? Are the assessment indicators simple enough for amateur evaluators to measure them?

- Relevance. Are the evaluative goals and measures relevant to participants’ experience? Can they gain something from participating in evaluation? Will they be able to take new action based on their involvement?

- Transparency. Is the institution willing to open up evaluative processes to outside involvement? Will participants be able to distribute and use the results of the evaluation for their own purposes?

If the answer to many of these questions is “yes,” it might be appropriate to pursue cooperative or bottom-up evaluation instead of a traditional study. If the answer is mostly “no,” staff members can improve the potential for participatory evaluation in the future by working to turn more of these answers into “yes.” For example, staff members might make traditional internal evaluations available for public use to enhance transparency, or they might work with participants to develop some questions for evaluation without including them in the entire process.

While it can be complex to execute, participatory evaluation encourages staff members to design measurement techniques that are actually useful—tools that they can use to improve the work for next time. When participants are invested in acting as researchers, they hold staff members accountable to the findings. Especially in long-standing partnerships—for example, consultative advisory boards—both the staff and community members should feel that research is helping enhance the project overall. Otherwise, why spend all that time on evaluation? In this way, participatory techniques can help make evaluation more beneficial to how cultural institutions function—not just in participatory projects, but across the board.

***

We’ve now looked at a range of techniques for planning, implementing, and evaluating participatory projects. In Chapter 11, we’ll focus inward and look at how institutional culture can impact which kinds of projects are most likely to succeed at different organizations. Sustaining participation isn’t just a matter of motivating visitors; it also requires developing management strategies that help staff members feel supported and enthusiastic about being involved.

Chapter 10 Notes

[1] For a comprehensive approach to outcome and impact measurement, you may want to download the British government report on Social ROI. For specific frameworks for impact assessment related to informal science projects, consult the NSF Framework for Evaluating Impacts of Informal Science Education Projects [PDF].

[3] This list came from the excellent InterAct report on Evaluating Participatory, Deliberative, and Co-operative Ways of Working, which you can download here [PDF].

[4] For a comprehensive resource bank of research and case studies related to public participation (with a focus on civic participation), check out this site.

[7] You can learn more about the study from Jeff Grabill, Stacey Pigg, and Katie Wittenauer’s 2009 paper, Take Two: A Study of the Co-Creation of Knowledge on Museum 2.0 Sites.

[9] See pages 145, 151, and 162 of the LSIE report for the discussion about the potential for scientific argumentation in museum settings.

[10] Visit the Powerhouse’s Make & Do website.

[11] For more information, consult Sebastian Chan’s January 2010 blog post, Let’s Make More Crowns, or the Danger of Not Looking Closely at Your Web Metrics.

[13] Read more about the LITMUS project in Section 5 of Evaluating Participatory, Deliberative, and Co-operative Ways of Working, available for download here [PDF].